Liu M Y, Breuel T, Kautz J. Unsupervised image-to-image translation networks[C]//Advances in Neural Information Processing Systems. 2017: 700-708.

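According to coupling theory, the marginal distributions follow easily from the joint distribution. Recovering the joint distribution from the marginals is very hard, however, because infinitely many joint distributions are consistent with the same pair of marginals.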
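Here is a tiny discrete illustration of why the marginals do not pin down the joint. It is not from the paper. Two different joint tables over binary $x_1$, $x_2$ end up with exactly the same marginals.

```python
def marginals(joint):
    """Return (p(x1), p(x2)) as the row and column sums of a joint table p(x1, x2)."""
    p_x1 = [sum(row) for row in joint]
    p_x2 = [sum(col) for col in zip(*joint)]
    return p_x1, p_x2


# Two different joint distributions over binary x1, x2 (rows: x1, columns: x2).
perfectly_coupled = [[0.5, 0.0],
                     [0.0, 0.5]]  # x1 and x2 always agree
independent = [[0.25, 0.25],
               [0.25, 0.25]]      # x1 tells us nothing about x2

print(marginals(perfectly_coupled))  # ([0.5, 0.5], [0.5, 0.5])
print(marginals(independent))        # ([0.5, 0.5], [0.5, 0.5])
```

Unpaired samples from each domain alone cannot distinguish these two tables. Telling them apart requires either paired data or an extra assumption, such as the shared latent space below.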

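This paper therefore proposes the UNIT (UNsupervised Image-to-image Translation) framework (Figure 1). UNIT uses a shared-latent space assumption to help learn the joint distribution of two domains from their marginal distributions ($p_{X_1}(x_1)$, $p_{X_2}(x_2)$).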

- The UNIT architecture builds on Coupled GANs, VAEs, cycle consistency, and a weight-sharing constraint.

- The UNIT framework can also be applied to domain adaptation tasks.

1. Assumptions

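- Unsupervised setting: only the marginals $p_{X_1}(x_1)$ and $p_{X_2}(x_2)$ are observed (unpaired images from each domain).

- Supervised setting: the joint $p_{X_1,X_2}(x_1, x_2)$ is observed (paired images).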

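Assume a shared-latent space exists. For any pair of corresponding images $x_1$, $x_2$, there is a shared latent code $z$ from which both images can be recovered, and this code can be computed from either image: $z = E_1(x_1) = E_2(x_2)$, $x_1 = G_1(z)$, $x_2 = G_2(z)$.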

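- Under this assumption, the translation functions are $F_{1\to2}(x_1) = G_2(E_1(x_1))$ and $F_{2\to1}(x_2) = G_1(E_2(x_2))$. A necessary condition for both to exist is cycle consistency: $x_1 = F_{2\to1}(F_{1\to2}(x_1))$ and $x_2 = F_{1\to2}(F_{2\to1}(x_2))$. In other words, the shared-latent space assumption implies the cycle-consistency assumption.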

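- On top of the shared-latent space, a shared intermediate representation $h$ is also assumed, so that a pair of corresponding images is generated as $z \to h \to x_1, x_2$:

  - $G_1 = G_{L,1} \circ G_H$

  - $G_2 = G_{L,2} \circ G_H$

  - $G_H: z \to h$ is the common high-level generation function.

  - $G_{L,1}, G_{L,2}: h \to x$ are the domain-specific low-level generation functions.

- $z$ can be viewed as a compact, high-level representation of the scene ("car in front, trees in back").

- $h$ can be viewed as a particular realization of $z$ through $G_H$ ("car/tree occupy the following pixels").

- $G_{L,1}$ and $G_{L,2}$ can be viewed as the actual image-formation functions of each domain ("tree is lush green in the sunny domain, but dark green in the rainy domain").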
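The factorization $G_i = G_{L,i} \circ G_H$ can be sketched with toy scalar functions. The bodies of `G_H`, `G_L1` and `G_L2` below are hypothetical stand-ins for the paper's convolutional networks. Only the composition structure mirrors the notes.

```python
def compose(f, g):
    """Return the composition f o g, i.e. x -> f(g(x))."""
    return lambda x: f(g(x))


# Hypothetical toy stand-ins operating on scalars.
def G_H(z):
    """Shared high-level generator z -> h (the same function for both domains)."""
    return 2.0 * z


def G_L1(h):
    """Domain-specific low-level generator for domain 1, h -> x1."""
    return h + 1.0


def G_L2(h):
    """Domain-specific low-level generator for domain 2, h -> x2."""
    return h - 1.0


G1 = compose(G_L1, G_H)  # G1 = G_{L,1} o G_H
G2 = compose(G_L2, G_H)  # G2 = G_{L,2} o G_H

z = 1.5
x1, x2 = G1(z), G2(z)    # a pair of corresponding "images" from one code
print(x1, x2)            # 4.0 2.0
```

Both generators call the same `G_H`, so any change to it affects both domains. This is what weight-sharing between the first layers of $G_1$ and $G_2$ (section 4) enforces in the real networks.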

2. Framework

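The framework consists of 6 subnetworks (Table 1):

- two domain encoders $E_1$, $E_2$,
- two domain generators $G_1$, $G_2$, and
- two adversarial discriminators $D_1$, $D_2$.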

3. VAE

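- Each pair $\{E_i, G_i\}$ forms a VAE. The encoder outputs only a mean vector $E_{\mu,i}(x_i)$, and the latent distribution has identity covariance: $q_i(z_i \mid x_i) = \mathcal{N}(z_i \mid E_{\mu,i}(x_i), I)$. $z_i$ is sampled with the reparameterization trick, $z_i = E_{\mu,i}(x_i) + \eta$ with $\eta \sim \mathcal{N}(0, I)$, so sampling stays differentiable.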
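Here is a minimal sketch of the reparameterization step with identity covariance, using the standard library's Gaussian sampler. The value of `mu` is a made-up stand-in for the encoder output $E_\mu(x)$.

```python
import random


def reparameterize(mu, rng):
    """Draw z ~ N(mu, I) as z = mu + eta, with eta ~ N(0, I).

    eta is sampled independently of the encoder output, so z is a
    deterministic, differentiable function of mu (dz/dmu = 1) and
    gradients can flow back into the encoder.
    """
    return [m + rng.gauss(0.0, 1.0) for m in mu]


rng = random.Random(0)
mu = [2.0, -1.0]  # pretend output of the mean encoder E_mu(x)
samples = [reparameterize(mu, rng) for _ in range(20000)]
mean = [sum(s[k] for s in samples) / len(samples) for k in range(len(mu))]
print(mean)  # close to [2.0, -1.0]
```

In a real framework the noise is drawn as a tensor, and the addition is recorded by autograd. The principle is the same.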

4. Weight-sharing

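- Following the shared-latent space assumption, a weight-sharing constraint is imposed on the two VAEs. The last few layers of $E_1$ and $E_2$ share weights, and so do the first few layers of $G_1$ and $G_2$.

- Weight-sharing alone does not guarantee that corresponding images in the two domains receive the same latent code. In the unsupervised setting, there are no corresponding pairs with which to train the networks to output the same code.

- However, adversarial training can map a pair of corresponding images in the two domains to the same latent code.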
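One simple way to realize tied weights is to let both networks reference the same layer object. The sketch below uses a hypothetical element-wise scalar layer in place of the paper's convolutional layers.

```python
class ScalarLayer:
    """Toy layer y = w * x + b applied element-wise (stand-in for a conv layer)."""

    def __init__(self, w, b):
        self.w = w
        self.b = b

    def __call__(self, xs):
        return [self.w * x + self.b for x in xs]


def run(layers, xs):
    """Apply a list of layers in order."""
    for layer in layers:
        xs = layer(xs)
    return xs


# Last encoder layer and first generator layer: one object each, referenced
# by both domains, so their weights are tied by construction.
enc_shared = ScalarLayer(1.0, 1.0)
gen_shared = ScalarLayer(1.0, -1.0)

E1 = [ScalarLayer(2.0, 0.0), enc_shared]  # private first layer for domain 1
E2 = [ScalarLayer(0.5, 0.0), enc_shared]  # private first layer for domain 2
G1 = [gen_shared, ScalarLayer(0.5, 0.0)]  # private last layer for domain 1
G2 = [gen_shared, ScalarLayer(2.0, 0.0)]  # private last layer for domain 2

z = run(E1, [1.0, 2.0])  # [3.0, 5.0]
x2 = run(G2, z)          # translated to domain 2: [4.0, 8.0]
```

Tying weights only constrains the architecture. As noted above, it does not by itself force $E_1(x_1) = E_2(x_2)$ for corresponding images. That property comes from combining the constraint with adversarial training.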

5. Streams

- Translation streams

  - $X_1 \to z_1 \to X_2$

  - $X_2 \to z_2 \to X_1$

- Reconstruction streams

  - $X_1 \to z_1 \to X_1$

  - $X_2 \to z_2 \to X_2$
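The four streams are just different encoder/generator pairings. The encoders and generators below are hypothetical toy maps on scalars, chosen so that a shared latent code exists ($x_1 = 2z$ and $x_2 = z + 3$ describe the same $z$).

```python
# Hypothetical toy encoders/generators (real ones are CNNs).
def E1(x1):
    return x1 / 2.0


def E2(x2):
    return x2 - 3.0


def G1(z):
    return 2.0 * z


def G2(z):
    return z + 3.0


def translate_1_to_2(x1):
    """Translation stream X1 -> z1 -> X2."""
    return G2(E1(x1))


def translate_2_to_1(x2):
    """Translation stream X2 -> z2 -> X1."""
    return G1(E2(x2))


def reconstruct_1(x1):
    """Reconstruction stream X1 -> z1 -> X1."""
    return G1(E1(x1))


def reconstruct_2(x2):
    """Reconstruction stream X2 -> z2 -> X2."""
    return G2(E2(x2))


print(translate_1_to_2(4.0), reconstruct_1(4.0))  # 5.0 4.0
```

During training, the reconstruction streams are scored by the VAE loss and the translation streams by the adversarial loss.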

6. GAN

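Adversarial training is applied only to images produced by the translation streams. Reconstructed images are not fed to the discriminators.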

7. Cycle consistency

- Cycle-reconstruction streams

  - $X_1 \to z_1 \to X_2' \to z_2 \to X_1'$

  - $X_2 \to z_2 \to X_1' \to z_1 \to X_2'$

- Purpose: to further regularize the ill-posed unsupervised image-to-image translation problem.
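Here is a sketch of the cycle-reconstruction stream for domain 1, again with hypothetical toy maps. When the maps agree on a shared latent code, the cycle closes exactly. A deliberately inconsistent encoder (`bad_E2`, also hypothetical) leaves a residual that the CC loss would penalize.

```python
def cycle_1(x1, enc1, gen1, enc2, gen2):
    """Cycle-reconstruction stream X1 -> z1 -> X2' -> z2 -> X1'."""
    z1 = enc1(x1)
    x2_prime = gen2(z1)  # translate to domain 2
    z2 = enc2(x2_prime)  # re-encode the translated image
    return gen1(z2)      # translate back to domain 1


# Hypothetical toy maps consistent with a shared latent code.
def E1(x):
    return x / 2.0


def G1(z):
    return 2.0 * z


def E2(x):
    return x - 3.0


def G2(z):
    return z + 3.0


def bad_E2(x):
    """A deliberately inconsistent domain-2 encoder."""
    return x - 2.0


x1 = 4.0
print(abs(x1 - cycle_1(x1, E1, G1, E2, G2)))      # 0.0: the cycle closes
print(abs(x1 - cycle_1(x1, E1, G1, bad_E2, G2)))  # 2.0: residual to be penalized
```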

8. Loss

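- Alternating updates:

  - Update $D_1$, $D_2$ by gradient ascent on the adversarial loss, with $E_1$, $E_2$, $G_1$, $G_2$ fixed.

  - Update $E_1$, $E_2$, $G_1$, $G_2$ by gradient descent on the full objective (VAE loss + GAN loss + CC loss), with $D_1$, $D_2$ fixed.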
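The alternating ascent/descent scheme can be illustrated on a toy two-player objective. The function `value` is a made-up stand-in, not the UNIT loss. `d` plays the discriminator side, and `g` plays the encoder/generator side.

```python
def value(g, d):
    """Toy objective V(g, d) = g*d - d**2/2 + g**2/2 (stand-in for the UNIT loss).

    Maximizing over d gives d = g and V = g**2, so the saddle point is g = d = 0.
    """
    return g * d - 0.5 * d * d + 0.5 * g * g


def alternate(g, d, lr=0.1, steps=200):
    """Alternate one ascent step on d ("D step") with one descent step on g ("E/G step")."""
    for _ in range(steps):
        d = d + lr * (g - d)  # dV/dd = g - d; g (the E/G side) held fixed
        g = g - lr * (d + g)  # dV/dg = d + g; d (the D side) held fixed
    return g, d


g, d = alternate(1.0, 0.0)
print(g, d, value(g, d))  # all very close to 0
```

With `lr=0.1`, each round of updates shrinks the state by a factor of about 0.9, so the iterates spiral into the saddle point. Real GAN objectives are non-convex, and no such guarantee holds there.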

9. VAE loss

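- $p_{G_1}$ and $p_{G_2}$ are modeled as Laplacian distributions.

- Minimizing the negative log-likelihood is therefore equivalent to minimizing the absolute (L1) distance between an image and its reconstruction.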
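The paper's VAE loss combines two terms:

- a KL term, weighted by $\lambda_1$, that pulls $q_i(z_i \mid x_i) = \mathcal{N}(E_{\mu,i}(x_i), I)$ toward the prior $p_\eta(z) = \mathcal{N}(0, I)$, and
- the reconstruction NLL, weighted by $\lambda_2$.

The sketch below checks numerically that the Laplacian NLL differs from the L1 distance only by a constant. It assumes a Laplace scale `b=1`. The default values of `lam1` and `lam2` are placeholders.

```python
import math


def laplace_nll(x, x_hat, b=1.0):
    """-log p(x | x_hat) with independent Laplace(x_hat, b) noise on each pixel."""
    return sum(abs(xi - xh) / b + math.log(2.0 * b) for xi, xh in zip(x, x_hat))


def l1_distance(x, x_hat):
    return sum(abs(xi - xh) for xi, xh in zip(x, x_hat))


def kl_to_standard_normal(mu):
    """KL(N(mu, I) || N(0, I)) = 0.5 * ||mu||^2 (identity covariance on both sides)."""
    return 0.5 * sum(m * m for m in mu)


def vae_loss(x, x_hat, mu, lam1=1.0, lam2=1.0):
    """lam1 * KL term + lam2 * reconstruction NLL (lam defaults are placeholders)."""
    return lam1 * kl_to_standard_normal(mu) + lam2 * laplace_nll(x, x_hat)


x = [0.0, 1.0, 2.0]
x_hat = [0.5, 1.0, 1.0]
# The NLL equals the L1 distance plus a constant that does not depend on x_hat.
print(laplace_nll(x, x_hat) - l1_distance(x, x_hat))  # about 2.0794 (= 3 * log 2)
```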

10. GAN loss
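For reference, here is the adversarial term for domain 1 in the paper's notation, as best we can reconstruct it:

- $\lambda_0$ is a weight hyper-parameter.
- $q_2$ is the latent distribution produced by $E_2$.
- $\mathcal{L}_{\text{GAN}_2}$ is obtained by swapping indices 1 and 2.

The fake samples $G_1(z_2)$, with $z_2$ encoded from $x_2$, are exactly the output of the translation stream $X_2 \to z_2 \to X_1$.

$$
\mathcal{L}_{\text{GAN}_1}(E_2, G_1, D_1) = \lambda_0\,\mathbb{E}_{x_1 \sim P_{X_1}}\big[\log D_1(x_1)\big] + \lambda_0\,\mathbb{E}_{z_2 \sim q_2(z_2 \mid x_2)}\big[\log\big(1 - D_1(G_1(z_2))\big)\big]
$$

$D_1$ maximizes this term, while $E_2$ and $G_1$ minimize it.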

11. CC loss
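A reconstruction of the cycle-consistency term for domain 1, to the best of our reading of the paper, is given below. Here $x_1^{1\to2} = G_2(z_1)$ is the translated image, and $\lambda_3$, $\lambda_4$ are weight hyper-parameters. The KL terms keep both latent codes on the cycle close to the prior, and the last term is the cycle reconstruction NLL. Because $p_{G_1}$ is Laplacian, that NLL reduces to an L1 distance.

$$
\mathcal{L}_{\text{CC}_1}(E_1, G_1, E_2, G_2) = \lambda_3\,\mathrm{KL}\big(q_1(z_1 \mid x_1) \,\|\, p_\eta(z)\big) + \lambda_3\,\mathrm{KL}\big(q_2(z_2 \mid x_1^{1\to2}) \,\|\, p_\eta(z)\big) - \lambda_4\,\mathbb{E}_{z_2 \sim q_2(z_2 \mid x_1^{1\to2})}\big[\log p_{G_1}(x_1 \mid z_2)\big]
$$

The overall objective then sums the VAE, GAN and CC terms of both domains:

$$
\min_{E_1, E_2, G_1, G_2}\ \max_{D_1, D_2}\ \mathcal{L}_{\text{VAE}_1} + \mathcal{L}_{\text{GAN}_1} + \mathcal{L}_{\text{CC}_1} + \mathcal{L}_{\text{VAE}_2} + \mathcal{L}_{\text{GAN}_2} + \mathcal{L}_{\text{CC}_2}
$$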

12. Experiments

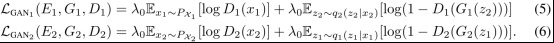

- A shallower discriminator leads to worse performance (Figure 2b).

- The number of weight-sharing layers has little impact (Figure 2b).

- The larger the weight on the negative log-likelihood term, the higher the translation accuracy (Figure 2c).

- Ablation study (Figure 2d):

  - Removing the weight-sharing constraint and the reconstruction streams reduces the framework to CycleGAN.

  - Removing the cycle-consistency constraint.

- Street images (Figure 3)

  Sunny to rainy, day to night, summery to snowy.

- Synthetic to real (Figure 3)

  Synthetic images: SYNTHIA dataset.

  Real images: Cityscapes dataset.

- Dog breed conversion (Figure 4)

ImageNet images. The head regions are extracted with a template-matching algorithm.

- Cat species conversion (Figure 5)

ImageNet.

- Face attribute (Figure 6)

CelebA dataset. Images with a given attribute form the 1st domain, and images without it form the 2nd domain.

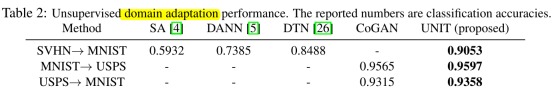

13. Domain Adaptation

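- Goal: train a classifier on labeled data from one domain (the source), then apply it to another domain (the target) whose labels are not used during training.

- UNIT is used in a multi-task learning setup:

  - Translate images between the source domain and the target domain.

  - Extract features of source-domain images with the source-domain discriminator $D_1$.

  - Share (tie) the weights of the high-level layers of $D_1$ and $D_2$.

  - For a pair of corresponding generated images in the two domains, minimize the L1 distance between the features extracted by the highest layers of $D_1$ and $D_2$. This encourages both discriminators to interpret corresponding images in the same way.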
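Here is a sketch of the feature-matching term. Two hypothetical toy discriminators have private low-level layers and one tied high-level layer. The L1 distance between their top-layer features is taken for a pair of corresponding generated images. Averaging rather than summing over features is an assumption.

```python
class TiedHighLevel:
    """Toy 'highest layer' shared by D1 and D2: ReLU(w * h) per feature."""

    def __init__(self, w):
        self.w = w

    def __call__(self, h):
        return [max(0.0, self.w * v) for v in h]


def make_features(low_w, shared):
    """Hypothetical discriminator feature extractor: private low layer + tied high layer."""
    def features(x):
        h = [low_w * v for v in x]  # domain-specific low-level layer
        return shared(h)            # tied high-level layer
    return features


def feature_l1(f1, f2):
    """Mean absolute difference between two feature vectors."""
    return sum(abs(a - b) for a, b in zip(f1, f2)) / len(f1)


shared = TiedHighLevel(0.5)
D1_features = make_features(2.0, shared)  # source domain
D2_features = make_features(1.0, shared)  # target domain

# A pair of corresponding generated images, one per domain (toy values).
x1_gen, x2_gen = [1.0, 2.0], [2.0, 4.0]
loss = feature_l1(D1_features(x1_gen), D2_features(x2_gen))
print(loss)  # 0.0
```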

The experiments found spatial context information to be useful: the input is RGB plus normalized xy coordinates.
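Here is a sketch of building such a 5-channel input by appending per-pixel coordinates. The notes only say "normalized", so scaling x and y to [0, 1] here is an assumption.

```python
def add_coord_channels(img):
    """Append normalized (x, y) pixel coordinates to every RGB pixel.

    img is an H x W nested list of (r, g, b) tuples; the result is an H x W
    nested list of (r, g, b, x, y) tuples with x, y scaled to [0, 1].
    """
    h, w = len(img), len(img[0])
    out = []
    for i, row in enumerate(img):
        out_row = []
        for j, (r, g, b) in enumerate(row):
            x = j / (w - 1) if w > 1 else 0.0
            y = i / (h - 1) if h > 1 else 0.0
            out_row.append((r, g, b, x, y))
        out.append(out_row)
    return out


img = [[(0, 0, 0)] * 3 for _ in range(2)]  # a 2 x 3 black image
five_channel = add_coord_channels(img)
print(five_channel[1][2])  # (0, 0, 0, 1.0, 1.0)
```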

14. Network Architecture